Executing Change Data Capture to a Data Lake on Amazon S3

Executing Change Data Capture (CDC) from a relational database to a data lake on Amazon S3 requires handling data at the record level. To insert, update, or delete specific records in a dataset, the processing engine must read all the files, apply the necessary changes, and rewrite the complete dataset as new files.
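To make that cost concrete, here is a minimal Python sketch of the naive rewrite approach. It assumes a hypothetical in-memory dataset where each "file" is a list of rows keyed by `id`; the function name `rewrite_with_changes` and the `.v2` naming are illustrative only, not part of any AWS or Hudi API. Note that every file is rewritten, including one that did not change at all.

```python
from typing import Dict, List, Set

# Hypothetical stand-in for a dataset on S3: each "file" maps to a list of
# row dicts keyed by "id". Real files would be Parquet or CSV objects.
Files = Dict[str, List[dict]]


def rewrite_with_changes(files: Files, upserts: Dict[int, dict], deletes: Set[int]) -> Files:
    """Apply record-level changes the naive way: read every file, patch rows,
    and write every file back out as a new object."""
    new_files: Files = {}
    seen: Set[int] = set()
    for name, rows in files.items():
        patched = []
        for row in rows:
            key = row["id"]
            if key in deletes:
                continue  # drop deleted records
            seen.add(key)
            patched.append(upserts.get(key, row))  # replace updated records
        # The whole file is rewritten, even if none of its rows changed.
        new_files[name + ".v2"] = patched
    # Upserts whose key was not found in any file are new inserts.
    inserts = [row for key, row in upserts.items() if key not in seen and key not in deletes]
    if inserts:
        new_files["inserts.v2"] = inserts
    return new_files
```

With three files and changes touching only two of them, all three are still rewritten; avoiding exactly this work is what record-level frameworks are for.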

On the other hand, when AWS CDC to S3 delivers data to the data lake in real time, the data is often fragmented across many small files, which leads to poor query performance. This can be partially resolved with Apache Hudi, an open-source data management framework that manages data at the record level in Amazon S3. As a result, building CDC pipelines with AWS CDC to S3 becomes a simpler process that is optimized for streaming data ingestion.
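The small-file problem is easiest to see as a packing exercise: many tiny files should be merged into fewer files near a target size. The sketch below is a simplified first-fit-decreasing illustration, not Hudi's actual algorithm; Hudi's own file sizing is driven by write settings such as `hoodie.parquet.small.file.limit` and `hoodie.parquet.max.file.size`. The 128 MB target and the function name `plan_compaction` are assumptions for illustration.

```python
from typing import List, Tuple

MB = 1024 * 1024


def plan_compaction(file_sizes: List[Tuple[str, int]], target_bytes: int = 128 * MB) -> List[List[str]]:
    """Group small files into batches whose combined size stays at or under
    target_bytes, using first-fit decreasing bin packing."""
    bins: List[Tuple[int, List[str]]] = []  # (bytes used, file names)
    # Place the largest files first so smaller ones can fill the gaps.
    for name, size in sorted(file_sizes, key=lambda f: f[1], reverse=True):
        for i, (used, names) in enumerate(bins):
            if used + size <= target_bytes:
                bins[i] = (used + size, names + [name])
                break
        else:
            # No existing batch has room: start a new output file.
            bins.append((size, [name]))
    return [names for _, names in bins]
```

For example, ten 20 MB files collapse into two output files (six files in the first, four in the second), so a query opens two objects instead of ten.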

You can also build a CDC pipeline with AWS Database Migration Service (AWS DMS) to capture changes from an Amazon RDS for MySQL database. These changes can then be applied to an Amazon S3 dataset with Apache Hudi on Amazon EMR. Hudi automatically manages checkpointing, rollback, and recovery, so you do not need to track which data has already been read or processed at the source.
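As a sketch of the Hudi side of such a pipeline, the helper below builds the Spark datasource options for writing DMS change records into a Hudi table. The option keys are standard Hudi write options, but the helper name, the table name `orders`, the columns `id`, `updated_at`, and `region`, and the S3 path are hypothetical. AWS DMS marks each change row in its S3 output with an `Op` column (`I`, `U`, or `D`); one possible approach is to upsert the `I`/`U` rows and write the `D` rows in a separate pass with `operation="delete"`.

```python
from typing import Dict

# Hudi write operations this sketch allows.
VALID_OPERATIONS = {"upsert", "insert", "bulk_insert", "delete"}


def hudi_write_options(table: str, record_key: str, precombine: str,
                       partition: str, operation: str = "upsert") -> Dict[str, str]:
    """Build Hudi datasource write options for applying CDC records."""
    if operation not in VALID_OPERATIONS:
        raise ValueError(f"unsupported Hudi operation: {operation}")
    return {
        "hoodie.table.name": table,
        "hoodie.datasource.write.table.type": "COPY_ON_WRITE",
        # Column that uniquely identifies a record (e.g. the MySQL primary key).
        "hoodie.datasource.write.recordkey.field": record_key,
        # When incoming rows share a key, this column decides which one wins.
        "hoodie.datasource.write.precombine.field": precombine,
        "hoodie.datasource.write.partitionpath.field": partition,
        "hoodie.datasource.write.operation": operation,
    }

# Hypothetical usage on Amazon EMR with PySpark, where `df` holds DMS output:
#   opts = hudi_write_options("orders", "id", "updated_at", "region")
#   df.write.format("hudi").options(**opts).mode("append").save("s3://my-bucket/hudi/orders/")
```

Keeping the options in one function makes it easy to reuse the same key and precombine settings for both the upsert pass and the delete pass.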

The main reason AWS CDC to S3 is valuable is that S3 lets users choose the storage level that fits their needs, from low-cost storage classes with restricted access to higher-priced options with unrestricted access.

Moreover, millions of batch operations can be done with S3 while providing all

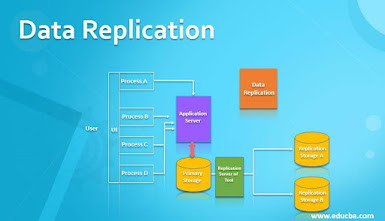

the benefits of the cloud such as data security and data replication.
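Choosing between cheaper, restricted storage and pricier, readily accessible storage is often automated with an S3 lifecycle rule that moves aging objects to lower-cost storage classes. The sketch below builds one such rule; the prefix `raw/cdc/`, the 30/90-day schedule, and the helper name `lifecycle_rule` are assumptions for illustration. S3 requires objects to be at least 30 days old before transitioning to `STANDARD_IA`, which the first check enforces.

```python
from typing import Dict


def lifecycle_rule(prefix: str, ia_days: int = 30, glacier_days: int = 90) -> Dict:
    """Build an S3 lifecycle rule that moves objects under `prefix` to
    cheaper, more restricted storage classes as they age."""
    if ia_days < 30:
        raise ValueError("objects must be at least 30 days old before moving to STANDARD_IA")
    if glacier_days <= ia_days:
        # Sketch-level check so the tiers apply in order.
        raise ValueError("glacier_days must be later than ia_days")
    return {
        "ID": "tier-" + (prefix.strip("/").replace("/", "-") or "all"),
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
    }

# Hypothetical usage with boto3 (bucket name is a placeholder):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-bucket",
#       LifecycleConfiguration={"Rules": [lifecycle_rule("raw/cdc/")]},
#   )
```

Raw CDC files are rarely re-read once Hudi has applied them, so tiering them down is a natural way to use the low-cost end of S3's storage options.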
